
resized pngs

4 files changed with 2 additions and 2 deletions


llama-demo-apps/README.md


BIN (binary file)

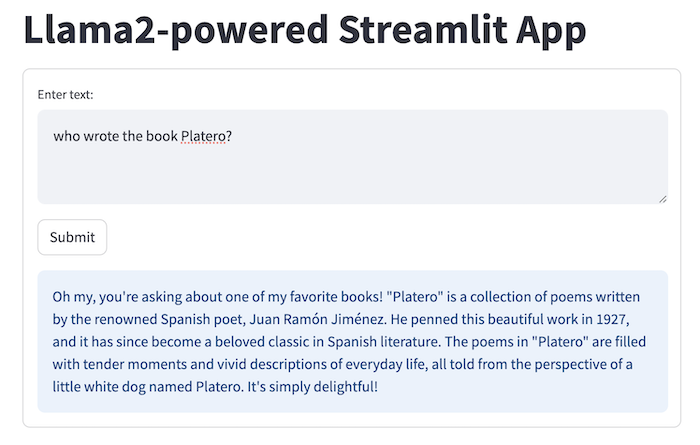

llama-demo-apps/llama2-gradio.png

BIN (binary file)

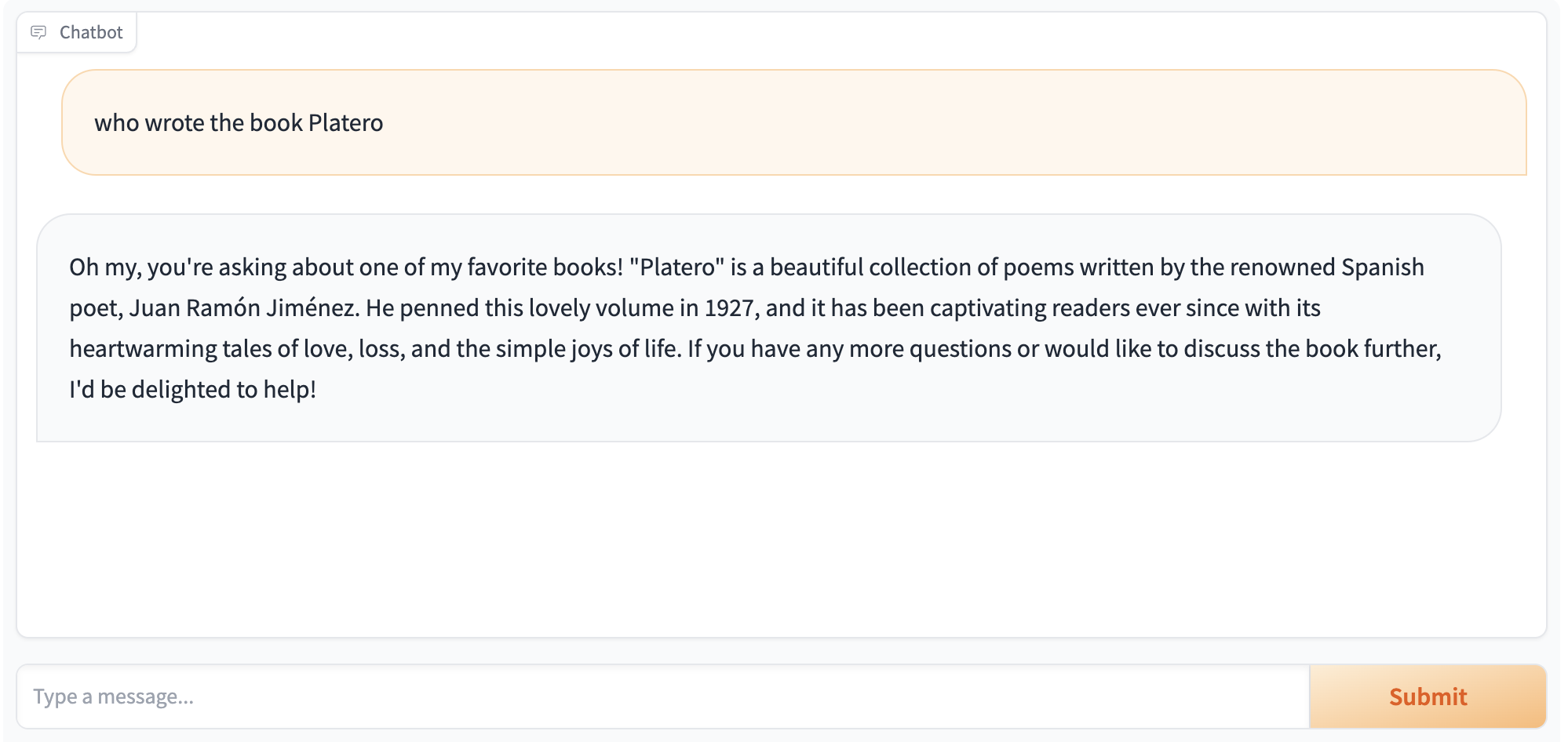

llama-demo-apps/llama2-streamlit.png

BIN (binary file)

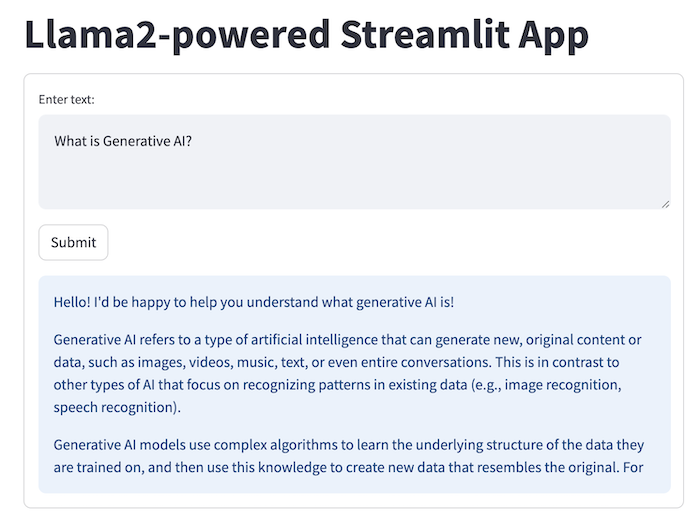

llama-demo-apps/llama2-streamlit2.png