updated README.md for llama-demo-apps

6 changed files with 209 additions and 0 deletions

+ 104

- 0

llama-demo-apps/Llama2_Gradio.ipynb

File diff suppressed because the file is too large

+ 83

- 0

llama-demo-apps/README.md

BIN

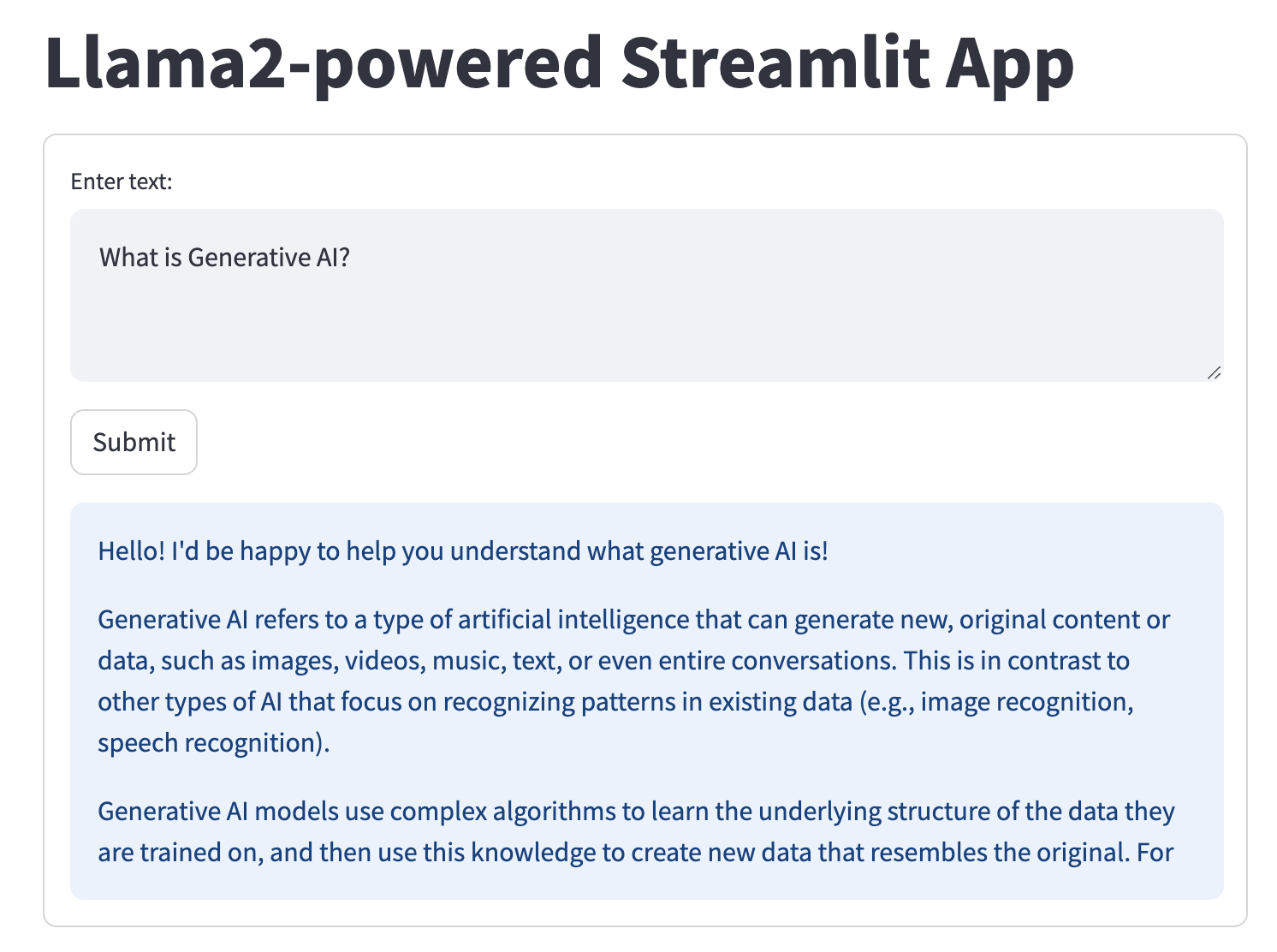

llama-demo-apps/llama2-streamlit.png

BIN

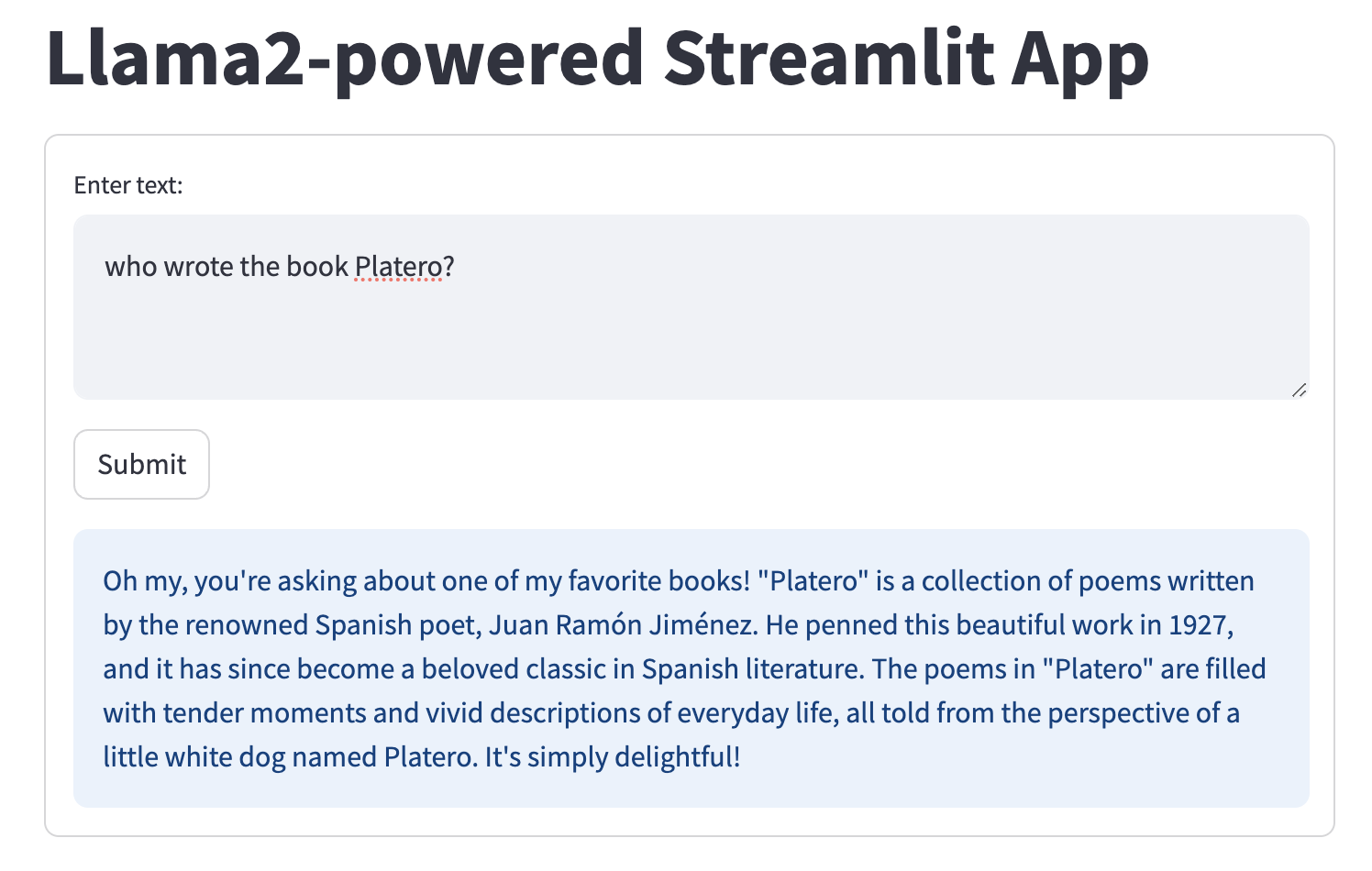

llama-demo-apps/llama2-streamlit2.png

BIN

llama-demo-apps/llm_log1.png

+ 22

- 0

llama-demo-apps/streamlit_llama2.py
