Sfoglia il codice sorgente

FMBench: benchmarking Llama models on AWS (#452)

7 ha cambiato i file con 406 aggiunte e 1 eliminazioni

File diff suppressed because it is too large

+ 133

- 0

recipes/benchmarks/fmbench/README.md

+ 259

- 0

recipes/benchmarks/fmbench/config.yml

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

BIN

recipes/benchmarks/fmbench/img/CFT.png

BIN

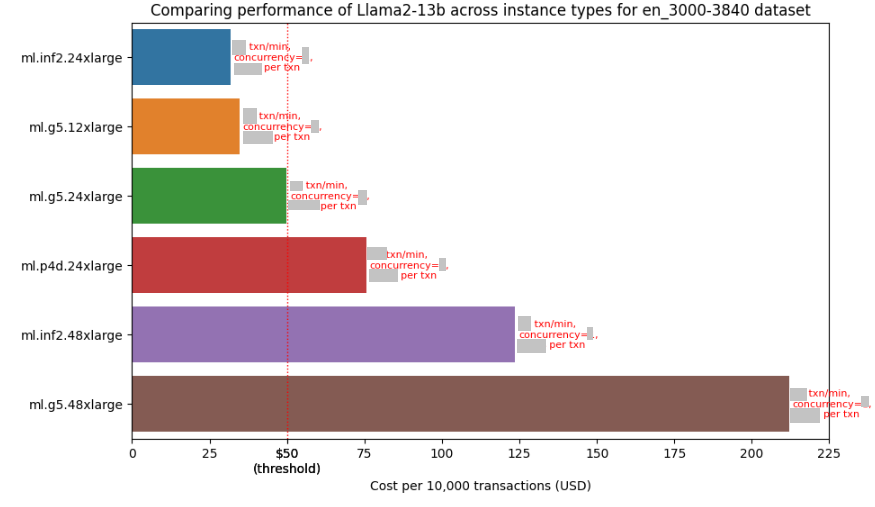

recipes/benchmarks/fmbench/img/instances.png

BIN

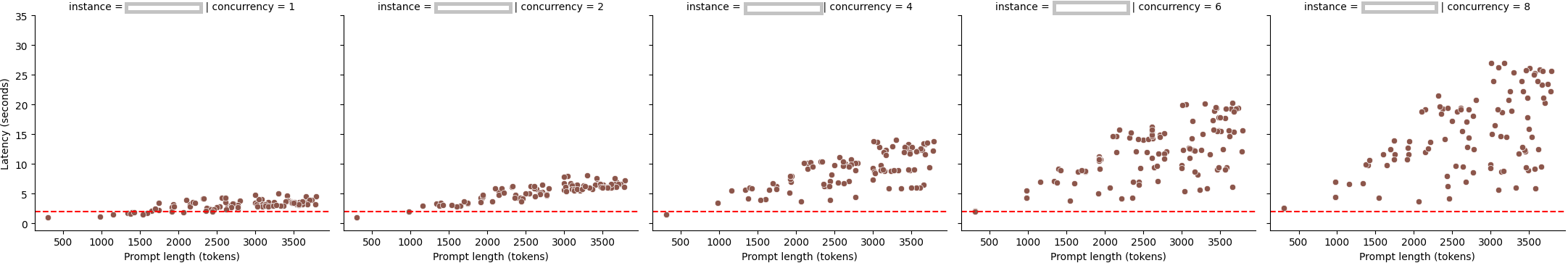

recipes/benchmarks/fmbench/img/latency_vs_tokens.png

File diff suppressed because it is too large

+ 1

- 1

recipes/inference/model_servers/llama-on-prem.md

+ 13

- 0

scripts/spellcheck_conf/wordlist.txt

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||